In an era where users can consume content from almost anywhere, OTT platforms are in fierce competition for user engagement and retention. One way to improve both is A/B testing. An A/B test compares the performance of two variations of an item in order to optimize the service. The most reliable A/B tests run as controlled experiments, so you can determine which version has the greatest impact on your KPIs. To elevate a service, product teams need to be able to constantly improve their user experience, whether that’s the user interface within their app, the use of notifications or personalization use cases.

When it comes to OTT platforms, A/B testing can assess various aspects, including UI design, navigation options, pricing models and even marketing strategies. By analyzing the impact of modifications on user behavior, providers can make informed decisions about how to enhance their platforms.

With A/B testing, you can learn what works for certain groups and gain a better understanding of user behavior to improve interactions with your platform. A/B testing is a continuous activity rather than a one-off project; for example, Netflix runs over 250 A/B tests yearly, many focusing on their personalization algorithms alone. When conducting tests, it’s vital that you measure each variation and compare it against your baseline to determine how it performs.

Get More from Your A/B Test

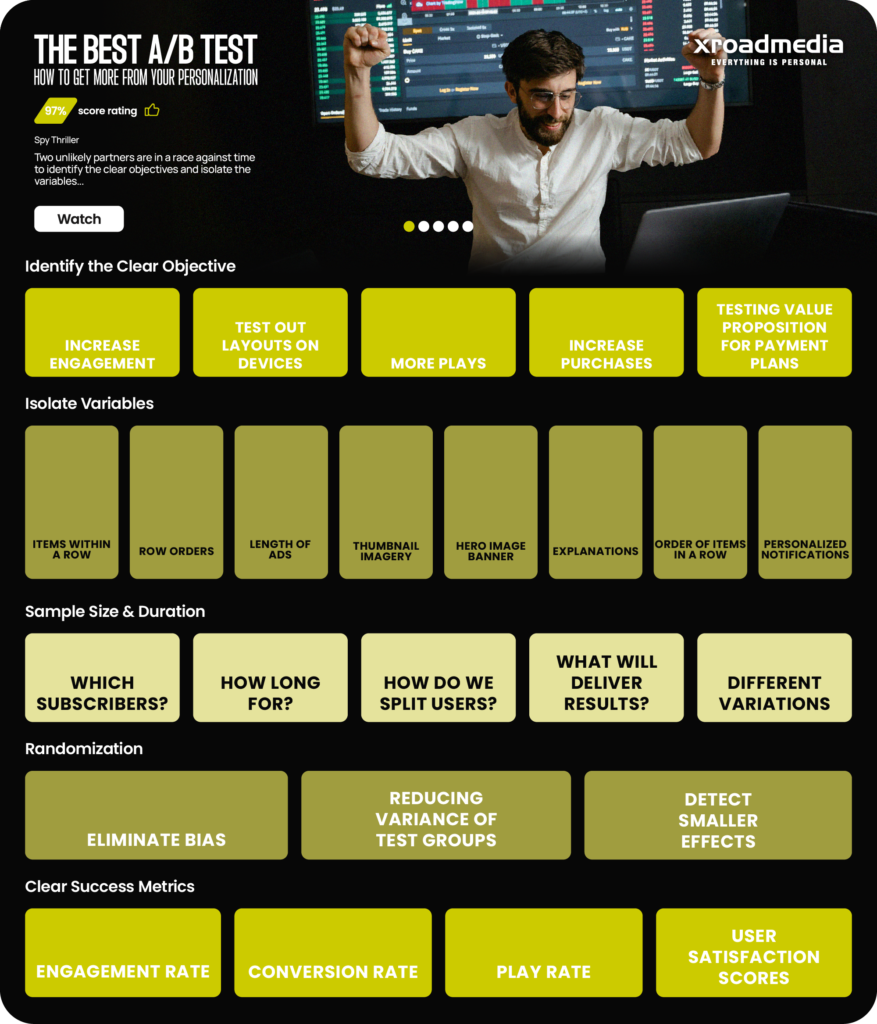

So, how can you ensure the best results? Below are some of our tips on getting the most out of your tests, whether you’re determining the length of your ads, the position of rows in the UI, the items within a row or the hero image banner on your home screen.

1. Identify the Clear Objective

Start by defining what you want to achieve through the A/B test. It could be increasing user engagement, improving content discoverability or driving subscription conversions. The more specific the goal, the better you can tailor the test.

For example, suppose you want to test whether diverse thumbnails, featuring different actors or scenes that appeal to different audiences, increase clicks on content. In that case, the objective is engagement. According to Netflix, changing the images associated with titles has resulted in 20-30% more viewings for certain titles.

2. Isolate Variables

To accurately measure the impact of a change, it’s important to focus on one variable at a time. For example, if you want to test different call-to-action buttons, keep everything else constant, such as the content being promoted or the user interface design.

3. Sample Size and Duration

Ensure that your test includes a sufficient number of users, representative of your target audience, to obtain statistically significant results. The duration of the test must also be determined to account for seasonal variations and any potential carryover effects.
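To make "a sufficient number of users" more concrete, here is a minimal sketch of one common way to estimate it, using the standard normal-approximation formula for comparing two conversion rates. The function name, the 5% significance level and the 80% power are illustrative assumptions on our part, not figures from any specific test described here.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH group for a two-sided test of two proportions.

    baseline_rate: current conversion rate of the control (e.g. 0.10 for 10%).
    min_relative_lift: smallest relative improvement worth detecting (e.g. 0.10 for +10%).
    alpha and power are assumed defaults; adjust them to your own risk tolerance.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)

    # Critical values of the standard normal distribution.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the per-group Bernoulli variances.
    variance = p1 * (1 - p1) + p2 * (1 - p2)

    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical scenario: 10% baseline play rate, aiming to detect a 10% relative lift.
    print(sample_size_per_group(0.10, 0.10))
```

The smaller the lift you want to detect, the more users you need, which is one reason a test on a low-traffic screen may have to run longer.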

4. Randomization

Randomly assign users to either the control or experimental group to eliminate bias and ensure that the groups are comparable in terms of user characteristics. You can also randomize within segments, based on demographic factors such as location or the engagement levels of each of your subscriber groups, so that every segment is represented in both groups.
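One common way to implement random assignment is to hash each user ID together with the experiment name, so a user always sees the same variant without you having to store the assignment. The sketch below assumes string user IDs and a hypothetical experiment name; `assign_variant` is an illustrative helper, not part of any particular platform.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically map a user to a variant with roughly equal probability.

    Including the experiment name in the hash means the same user can land in
    different groups across different experiments.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical user IDs and experiment name.
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid, "hero-banner-test"))
```

Because the assignment depends only on the user ID and experiment name, the same call returns the same group on every device and session.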

5. Clear Success Metrics

Define KPIs that align with your objective. These could be click-through rates, viewing time, conversion rates or any other measurable metric relevant to your test. Clear success metrics will help you to evaluate the impact of the changes accurately. For example, for one of our clients, True Digital, we co-ordinated an A/B test with their product team with the end goal of increasing the play rate and time spent. One strategy was moving content rails with niche content, such as an anime row, from the 21st position to the 5th, which resulted in gains including a 21.05% increase in plays.

Interpreting the Success of an A/B Test

Data is better than hunches; once the A/B test has been completed, the results need to be compared to define the next best steps. A/B testing is an iterative process, so it’s essential to continuously monitor and refine your personalization strategies based on the insights gained from the tests. If you don’t get the results you were hoping for, then it’s time to adapt the hypothesis and objectives to help boost your KPIs.
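When comparing results, it helps to check whether a difference is larger than chance alone would explain. Below is a minimal sketch of a two-proportion z-test, one standard way to compare conversion-style metrics such as play rate. The function name and the sample counts are hypothetical, and the sketch assumes each group has at least some conversions and some non-conversions.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare control (a) and variation (b) conversion rates.

    Returns (z, p_value, relative_lift), where relative_lift is the change
    of b over a as a fraction of a's rate.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b

    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))

    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    relative_lift = (p_b - p_a) / p_a
    return z, p_value, relative_lift


if __name__ == "__main__":
    # Hypothetical counts: plays out of users exposed in each group.
    z, p, lift = two_proportion_z_test(1000, 10000, 1100, 10000)
    print(f"z={z:.2f}, p={p:.4f}, lift={lift:.1%}")
```

A small p-value suggests the variation really differs from the baseline; a large one usually means you need more data or a revised hypothesis.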

For product and editorial teams, A/B testing can offer first-hand results on how their audiences are receiving the product and the content. Editorial teams in particular may be concerned about losing control, especially if they have certain content they want to promote. With the right tools, you keep the level of interaction and control you need while still conducting a fair test to see what works best for each user.

Personalization is key to boosting engagement, with content recommendations, notifications, dynamic UI and even tailored upsell offers. However, to do personalization right, it needs to be tried and tested for each group of your subscribers. By leveraging A/B testing in TV apps, you can understand what works best for your user experience and engagement, helping services tackle issues like churn.

A/B testing within OTT platforms offers a data-driven approach to optimizing user experience. By conducting well-designed tests, focusing on specific variables and analyzing results accurately, providers can make informed decisions to enhance their platforms and keep users engaged in the ever-evolving world of streaming. Regular testing lets you improve the personalized experience of every single user and helps your product teams find the most successful outcome. Not only has A/B testing proven to deliver results in the short run, but it can also help you plan for the future.

If you want to understand how we work with some of the top media companies to deliver the best personalized experience to users and the most effective A/B tests, contact our experts today.